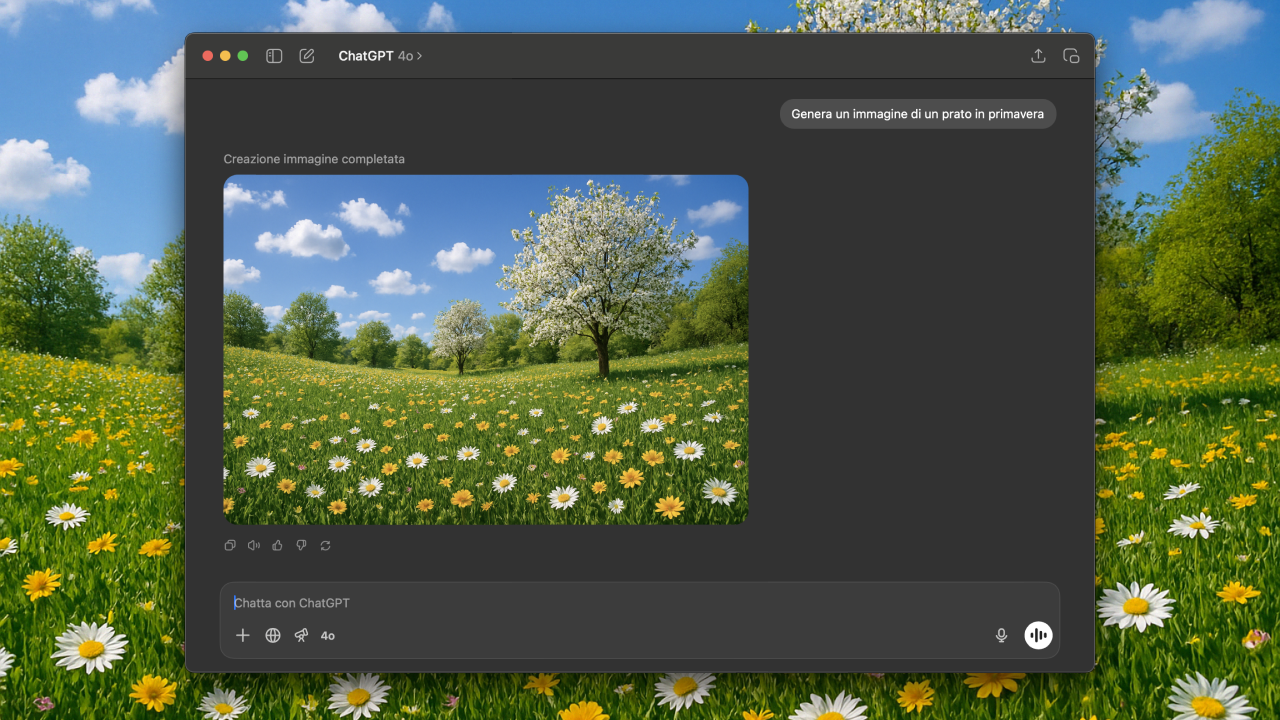

Artificial intelligence and energy together could revolutionise the energy sector. How? What are the predictions for the future?

Strategically integrated, artificial intelligence and energy could revolutionise the energy sector in every way, from optimising existing structures to innovating in crucial technological areas. In this article, we will analyse the current situation, experts’ predictions for the future and the challenges that this interaction will inevitably face.

Artificial intelligence and energy: why is reflection necessary?

Artificial intelligence and energy must be considered together, as two sides of the same coin, because their relationship runs both ways: AI needs energy, and the energy sector in turn needs the potential of AI to evolve and innovate in a context of constantly increasing demand.

The relevance of the topic is such that the IEA (International Energy Agency), an intergovernmental organisation working for global energy security and the promotion of sustainable energy policies, published a report in April 2025 entitled ‘Energy and AI’. Across its 304 pages, the report argues a very clear thesis: the revolutionary potential of artificial intelligence must be exploited to maximise innovation and efficiency in a strategic sector such as energy. This integration, says the IEA, is essential to optimise, rethink and renew a system that, day after day, must meet the growing needs of the population, industry and services.

Now that the reasons are clear, it is time to delve deeper and answer specific questions: how much do AI data centres consume today, and how much will they consume in the future? How will that demand be met? How can AI help the energy sector? What will the main challenges be? Let’s see how the IEA experts responded.

Why does artificial intelligence need the energy sector?

The answer to this question, as you might guess, is simple: because it consumes a lot, and will consume more and more as it becomes more widespread in various areas of daily life. To put it another way, AI could represent a revolution comparable to the discovery of electricity, precisely because of its status as a general-purpose technology. Wall Street appears to be well aware of this: between the launch of ChatGPT in November 2022 and the end of 2024, approximately 65% of the growth in the market cap of the S&P 500 was attributable to companies linked to artificial intelligence. That share is worth roughly $12 trillion (twelve thousand billion). Also worth noting is the interest in the Crypto AI category, as in the case of Grayscale.

As in the most classic of circular dynamics, such a massive injection of capital has triggered an investment rush, with major tech companies planning to spend up to $300 billion on artificial intelligence-related assets, facilities and equipment in 2025 alone. Of course, much of this funding is absorbed by data centres, which are essential for the training and implementation of AI, but are extremely energy-intensive.

How much do data centres consume?

Data centres, defined as a complex of servers and storage systems for data processing and storage, currently account for around 1.5% of global electricity consumption, or 415 TWh (terawatt hours). A data centre designed for AI, for example, can require the same amount of electricity as 100,000 average households, while those under construction, which are significantly larger, could require up to 20 times that amount.

Looking back, data centres have increased their electricity consumption by around 12% per year since 2017, four times faster than total global consumption. In other words, if the world’s electricity demand has grown by 3% a year since 2017, data centre demand has grown at four times that rate. The most important driver of this increase is artificial intelligence, followed by digital services, which are also in high demand. The IEA also reports that, in 2024, the top three consumers were the United States (45% of the total), China (25%) and the European Union (15%).
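To get a feel for what these growth rates mean once they accumulate, here is a minimal Python sketch. It assumes that the 12% and 3% figures are annual rates compounded yearly and that ‘today’ means 2024; both are our reading of the figures, not something the numbers above spell out.

```python
# Assumption: 12% and 3% are annual growth rates, compounded yearly,
# and the period runs from 2017 to 2024.
YEARS = 2024 - 2017


def cumulative_growth(annual_rate, years):
    """Total growth factor after compounding an annual rate for `years` years."""
    return (1 + annual_rate) ** years


data_centres = cumulative_growth(0.12, YEARS)
global_demand = cumulative_growth(0.03, YEARS)

print(f"Data centres: x{data_centres:.2f}")
print(f"Global electricity demand: x{global_demand:.2f}")
```

Under these assumptions, data centre consumption more than doubles over the period, while total electricity demand grows by a little under a quarter.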

Data centre consumption currently stands at 415 TWh, and the IEA report estimates that this figure will more than double by 2030, reaching around 945 TWh, slightly more than the total consumption of Japan. As for projections for 2035, the report refers to a ‘scissors effect’, as its calculations include variables related to the development of efficient energy-saving solutions. In any case, the range runs from a minimum of 700 TWh to a maximum of 1,700 TWh.
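The 2030 projection can also be expressed as an implied average annual growth rate. The sketch below is a back-of-the-envelope calculation, not a figure from the report: it assumes 2024 as the base year for the 415 TWh value and constant year-on-year growth up to 2030.

```python
def implied_annual_growth(start_twh, end_twh, years):
    """Constant annual growth rate that takes start_twh to end_twh in `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1


# Assumption: 415 TWh refers to 2024; 945 TWh is the 2030 projection.
rate = implied_annual_growth(415, 945, 2030 - 2024)
ratio = 945 / 415

print(f"Growth factor 2024-2030: x{ratio:.2f}")
print(f"Implied annual growth: {rate:.1%}")
```

On these assumptions, the projection corresponds to growth of a little under 15% per year, with consumption ending up at roughly 2.3 times today’s level.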

This incredible increase is linked both to the greater ‘physical presence’ of data centres around the world and to their intensified use, assuming that, in the future, AI will spread to every corner of the cities in which we live. In fact, in terms of consumption, the most significant impact is during the operating phase rather than during production or configuration: a latest-generation 3-nanometre chip requires approximately 2.3 MWh (megawatt hours) per wafer – the circular slice of silicon on which the circuits are manufactured – to be produced, 10 MWh to be configured and 80 MWh to operate during a five-year life cycle.
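The weight of the operating phase becomes clearer when the three figures are expressed as shares of the total. This is a minimal sketch that assumes all three values refer to the same unit (one wafer’s worth of chips) over the five-year life cycle; the dictionary keys are our own labels.

```python
# Energy per life-cycle stage in MWh, as quoted above.
# Assumption: all three figures refer to the same unit of hardware.
stages_mwh = {"production": 2.3, "configuration": 10.0, "operation": 80.0}

total_mwh = sum(stages_mwh.values())
shares = {stage: mwh / total_mwh for stage, mwh in stages_mwh.items()}

for stage, share in shares.items():
    print(f"{stage}: {share:.1%}")
```

Read this way, operation accounts for well over four fifths of the total, which is why the intensity of use matters more than manufacturing.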

How can this demand be met in the future?

The report answers in the only way possible, namely with a diversified range of energy sources. In particular, in the baseline scenario – obtained from an analysis of current conditions, without including optimistic or pessimistic variables – renewables and natural gas should drive this energy mix, with the former covering about half of demand (450 TWh) and the latter accounting for almost a quarter (175 TWh). Next comes nuclear energy, which, with the implementation of small modular reactors (SMRs), could contribute slightly less than natural gas.

Let’s now shift our focus to the energy sector.

Why does the energy sector need artificial intelligence?

Because, as is evident, artificial intelligence is capable of optimising every aspect of the energy sector: exploration, production, maintenance, safety and distribution. In short, applying AI to the energy sector, as we mentioned at the beginning of this article, could revolutionise it. Let’s look at some specific cases:

AI and energy together in the oil and gas industry

The report informs us that in this area, the combination of artificial intelligence and energy was adopted earlier than in the rest of the sector. The main uses relate to the optimisation of reservoir exploration and identification processes, the automation of hydrocarbon extraction activities (well management, flow control and fluid separation), and everything related to safety and maintenance: leak detection, preventive maintenance and emission reduction. In the future, the IEA reports, this integration could translate into a 10% saving in operating costs in deep waters.

Artificial intelligence in the electricity sector

In the field of electricity, the IEA report predicts that AI will play a key role in balancing networks, which are becoming increasingly digitised and decentralised – as is the case with rooftop solar panels. Specifically, AI could improve the forecasting and integration of renewable energy generation by reducing curtailment – forced reduction – and, therefore, emissions. In simple terms, this means that artificial intelligence, thanks to its ability to analyse endless series of data, would be able to make more accurate predictions about renewable energy production (which is influenced by the weather) and average demand. This would make it possible to integrate renewable energy with other energy sources in a more precise and intelligent way, avoiding unnecessary waste associated with the arbitrary blocking of excess electricity (curtailment).

There is also an interesting issue related to increasing the efficiency of existing networks. In a nutshell, integrating AI would unlock up to 175 GW (gigawatts) of transmission capacity. How? Through the use of remote sensors and management tools capable of reading and processing huge amounts of data in real time. Currently, the maximum amount of electricity a transmission line may carry is set on the basis of static and conservative conditions, calculated with a very wide safety margin: in summer, for example, air temperature and wind are assumed to be unfavourable so that excessive current cannot overheat the cables and cause them to sag or fail. The result is that, most of the time, networks operate below their real capacity. With AI-based management, these conditions would change from static to dynamic (Dynamic Line Rating, DLR), allowing real-time control of the load capacity of the networks themselves, with positive effects on the amount of energy circulating.
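The difference between a static and a dynamic rating can be illustrated with a toy calculation. The sketch below is not the method used by grid operators or described in the report: it assumes a heavily simplified heat balance in which the heat generated by the current (I²R) equals the heat removed by the air, it ignores solar heating and radiation, and the `ampacity` function, the cooling coefficient and all numerical values are hypothetical.

```python
import math


def ampacity(ambient_c, wind_ms, max_conductor_c=75.0, resistance_ohm_per_m=8e-5):
    """Maximum current (A) from a toy heat balance: I^2 * R = h * (T_max - T_ambient).

    All parameters are illustrative assumptions, not real line data.
    """
    # Hypothetical cooling coefficient (W per metre per degree C) that rises with wind.
    cooling = 1.0 + 2.0 * math.sqrt(wind_ms)
    return math.sqrt(cooling * (max_conductor_c - ambient_c) / resistance_ohm_per_m)


# Static rating: a fixed, conservative assumption (hot day, almost no wind).
static_rating = ampacity(ambient_c=40.0, wind_ms=0.6)

# Dynamic rating: the same line, rated with measured conditions (mild, breezy day).
dynamic_rating = ampacity(ambient_c=25.0, wind_ms=3.0)

headroom = dynamic_rating / static_rating - 1.0
print(f"Static: {static_rating:.0f} A, dynamic: {dynamic_rating:.0f} A, headroom: {headroom:.0%}")
```

The point of the sketch is only qualitative: when the weather is milder than the worst case assumed by the static rating, the same cable can safely carry more current, and real-time data is what makes it possible to know when that is the case.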

Finally, artificial intelligence applied to the electricity sector could make a concrete contribution to network fault detection and preventive maintenance of power plants. In the first case, by speeding up problem localisation operations, with a 30-50% reduction in outage duration. In the second, by optimising the identification of potential damage, giving advance warning of the need to replace crucial components, with estimated savings of $110 billion by 2035.

AI in industry, transport and building heating

To conclude this section, the report briefly touches on the three areas belonging to the macro-category of ‘end uses’, i.e. the uses to which energy is put after distribution to end users. With regard to industry, the IEA quantifies the benefits of implementing AI applications as energy savings equal to Mexico’s total consumption today. In transport, it talks about cuts equivalent to the energy used by 120 million cars, thanks to traffic and route optimisation. Finally, AI could improve the management of heating systems in residential and non-residential buildings, with an expected reduction in electricity use of around 300 TWh, the amount produced by Australia and New Zealand in a year.

Artificial intelligence and energy: innovations

Artificial intelligence can contribute significantly to energy innovation because it can rapidly search for molecules and materials that improve existing technologies. By combining predictive and generative models with the vast body of academic literature, AI greatly accelerates the process of selecting candidates and creating suitable prototypes. In particular, four key areas would benefit from the potential of AI:

- Cement production, making the research and development of new mixtures more efficient and reducing the use of clinker, a highly polluting component that forms the basis of cement itself.

- The search for CO2 capture materials, such as MOFs (Metal Organic Frameworks), reducing the energy consumption and costs associated with CCUS (Carbon Capture, Utilisation and Storage), the process of capturing CO2 for reuse or storage.

- The design of catalysts for synthetic fuels, i.e. substances that accelerate chemical reactions to produce low-emission fuels. The difficulty in designing this type of catalyst lies in the infinite number of possible combinations between molecules, a process that AI can greatly accelerate.

- Battery research and development, facilitating material testing, performance prediction, production optimisation and end-of-life management processes.

What are the challenges of integrating AI and the energy sector?

The report concludes by presenting, as it should, the obstacles that this ambitious project will face. First, the IEA warns us that increasing digitalisation, while having positive implications for energy security, inevitably also brings with it specific risks, such as vulnerability to cyberattacks. A fundamental problem also concerns the security of energy supply chains: chips, as is well known, require large volumes of rare earths and critical minerals, which are concentrated in a few areas of the world – China controls 98% of gallium refining. A third issue relates to the decoupling of investment in data centres and investment in energy infrastructure, which is vital for the functioning of the system. Finally, there is the issue of the lack of digital skills and qualified personnel, coupled with poor dialogue between institutions, the tech sector and the energy sector.

We don’t know about you, but after reading and analysing this report, we are fairly convinced that artificial intelligence will come to dominate this sector too, with all the burdens and rewards, risks and opportunities that entails. But then again, nothing ventured, nothing gained.